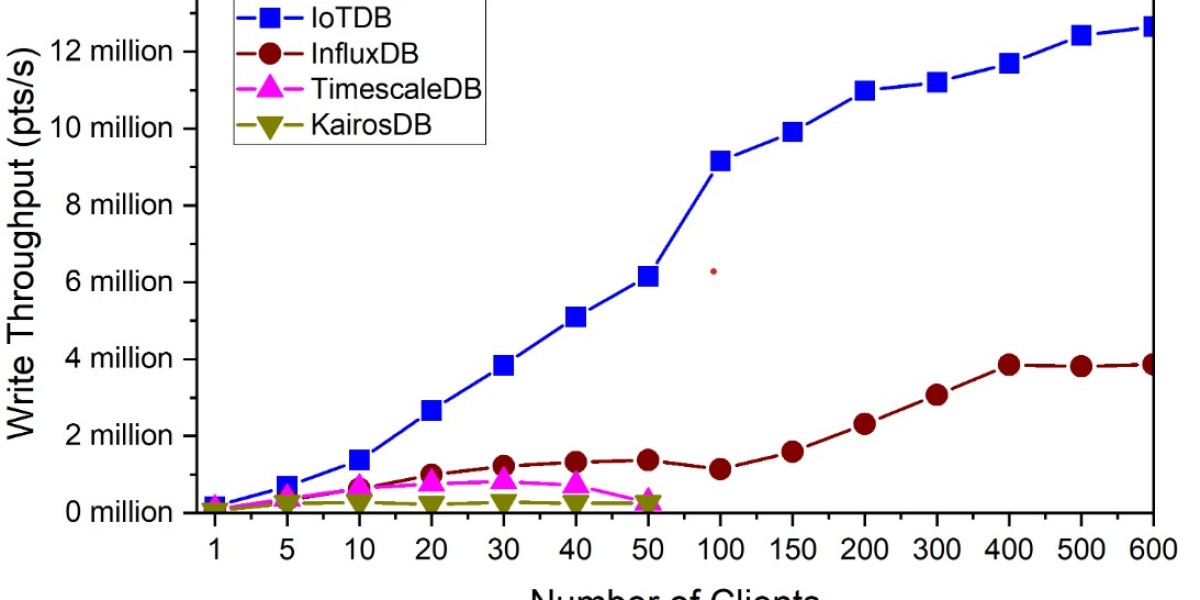

Real-time data analytics has become essential in industries that rely on continuous metrics such as energy production, logistics, finance, and manufacturing. As organizations look for the best tools to handle fast data workloads, real-time queries have become a defining capability of many time series systems. When examining performance and flexibility across options, an open source time series database comparison makes it easier to understand how different implementations handle continuous writes, updates, and query operations. Most solutions aim to reduce query latency, support high write throughput, and provide long-term data retention without degrading access speed. However, their underlying storage models, compression methods, and indexing strategies often lead to noticeable differences in real-time behavior.

Time series systems are designed to store event data that arrives at short and predictable intervals. For example, a device may send temperature, CPU usage, or power output every few seconds. The readings accumulate quickly, which is why efficient storage and compression are so important. Many real-time workloads use batch-style ingestion for speed, while still allowing queries within seconds of arrival. Beyond raw performance, users also consider how easy it is to scale horizontally or vertically, whether queries can be run against historical data without long delays, and whether the system supports downsampling to reduce storage costs. A balance between write performance and read performance is usually the deciding factor for real-time use cases.
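
As a rough illustration of downsampling, the sketch below averages raw readings into fixed-width time buckets. The function name `downsample`, the `(timestamp, value)` tuple layout, and the 60-second bucket are assumptions made for this example and do not reflect any particular database's API.

```python
from collections import defaultdict
from statistics import mean


def downsample(points, bucket_seconds):
    """Average (timestamp, value) points into fixed-width time buckets."""
    buckets = defaultdict(list)
    for ts, value in points:
        # Align each timestamp to the start of its bucket.
        bucket_start = ts - (ts % bucket_seconds)
        buckets[bucket_start].append(value)
    # Emit one averaged point per bucket, ordered by time.
    return [(start, mean(values)) for start, values in sorted(buckets.items())]


# Hypothetical readings: timestamps in seconds, values in degrees.
raw = [(0, 20.0), (5, 22.0), (10, 21.0), (60, 30.0), (65, 32.0)]
print(downsample(raw, 60))
```

Five raw points collapse into two, which is the storage saving, and the individual readings inside each minute are no longer recoverable, which is the precision cost.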

At the heart of the discussion is how each time series database (TSDB) manages indexing. Indexing determines how quickly a system can locate relevant data for a query. Some implementations rely on time-based indexes, while others use multi-dimensional approaches that mix tags, labels, or metadata with timestamps. Multi-dimensional indexing improves filtering, since users can request data by measurement type, unit, or device ID. However, it also increases the cost of updates and requires more metadata storage. Retention policies also play a key role. Systems that keep uncompressed raw data for longer periods may answer high-resolution queries faster, at the cost of higher storage usage. Likewise, systems that aggressively compress or downsample data may excel in cost efficiency but reduce query precision on older records.
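
To make the multi-dimensional idea concrete, here is a minimal in-memory sketch that combines an inverted index on tags with time-sorted points per series. The class `TagIndex` and its methods are hypothetical names for this example, and real systems use far more compact on-disk structures.

```python
from bisect import bisect_left, insort
from collections import defaultdict


class TagIndex:
    """Toy index: tag pairs map to series ids, and each series keeps sorted points."""

    def __init__(self):
        self.series_by_tag = defaultdict(set)  # (key, value) -> set of series ids
        self.points = defaultdict(list)        # series id -> sorted [(ts, value)]

    def insert(self, series_id, tags, ts, value):
        # Every tag pair gets an index entry: this is the extra update
        # cost and metadata storage that tag indexing brings.
        for item in tags.items():
            self.series_by_tag[item].add(series_id)
        insort(self.points[series_id], (ts, value))

    def query(self, tags, start, end):
        """Return points with start <= ts < end for series matching all tags."""
        matching = None
        for item in tags.items():
            ids = self.series_by_tag.get(item, set())
            matching = ids if matching is None else matching & ids
        result = {}
        for series_id in sorted(matching or ()):
            pts = self.points[series_id]
            # (start,) sorts before any (start, value), so this finds ts >= start.
            lo = bisect_left(pts, (start,))
            hi = bisect_left(pts, (end,))
            result[series_id] = pts[lo:hi]
        return result


idx = TagIndex()
idx.insert("s1", {"device": "a", "metric": "temp"}, 10, 21.5)
idx.insert("s1", {"device": "a", "metric": "temp"}, 20, 22.0)
idx.insert("s2", {"device": "b", "metric": "temp"}, 15, 19.0)
print(idx.query({"metric": "temp", "device": "a"}, 15, 30))
```

The tag lookup narrows the candidate series first, and the binary search on timestamps then limits the scan to the requested time range.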

Query execution strategies vary significantly as well. Some systems use streaming execution paths, allowing queries to be performed on data that is still in memory buffers, reducing delay but increasing memory consumption. Others focus on columnar storage layouts, which compress similar data together and enable faster scanning for numeric calculations. In real-time dashboards or monitoring tools, users typically prioritize low-latency reads, especially when evaluating metrics like peak loads, anomaly detection, or alert triggering. To serve these needs, some systems internally maintain cache layers or indexed summary tables that reduce query scanning time.
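
The summary-table idea can be sketched as a per-minute rollup that is updated on every write, so that a dashboard query for a peak or an average reads a handful of summary rows instead of scanning raw points. `MinuteRollup` and its methods are illustrative assumptions, and query ranges here are assumed to be aligned to minute boundaries.

```python
class MinuteRollup:
    """Toy summary table keyed by minute start: [count, total, maximum]."""

    def __init__(self):
        self.summary = {}

    def write(self, ts, value):
        minute = ts - ts % 60
        entry = self.summary.get(minute)
        if entry is None:
            self.summary[minute] = [1, value, value]
        else:
            entry[0] += 1
            entry[1] += value
            entry[2] = max(entry[2], value)

    def _rows(self, start, end):
        # Only summary rows are touched; raw points are never scanned.
        return [row for minute, row in self.summary.items() if start <= minute < end]

    def peak(self, start, end):
        rows = self._rows(start, end)
        return max(row[2] for row in rows) if rows else None

    def average(self, start, end):
        rows = self._rows(start, end)
        count = sum(row[0] for row in rows)
        return sum(row[1] for row in rows) / count if count else None


rollup = MinuteRollup()
for ts, value in [(0, 10.0), (30, 50.0), (61, 30.0), (125, 70.0)]:
    rollup.write(ts, value)
print(rollup.peak(0, 120), rollup.average(0, 120))
```

The cost is extra work on the write path and extra memory for the summaries, which mirrors the latency versus resource trade-off described above.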

Scaling considerations are equally important. For small deployments, a single-node architecture might be enough to handle both ingestion and queries simultaneously. But larger deployments, such as those in telecom or high-volume IoT, often demand clustering. Clustering allows data to be distributed across multiple nodes and improves fault tolerance, but it introduces new questions: How is data sharded across nodes? Does the system rebalance automatically? What happens when nodes fail? Real-time performance depends on how quickly rebalancing happens during node changes and how well the cluster handles write backpressure.
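
One simple way to picture the sharding question is hash-based placement, sketched below. The helper `shard_for`, the node names, and the series key format are all hypothetical. Modulo hashing is used here only because it is easy to follow; it is not presented as what any specific system does.

```python
import hashlib


def shard_for(series_key, nodes):
    """Pick a node for a series by hashing its key (simple modulo placement)."""
    digest = hashlib.sha256(series_key.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


keys = [f"device-{i}/temperature" for i in range(1000)]
three_nodes = ["node-a", "node-b", "node-c"]
four_nodes = three_nodes + ["node-d"]

# Count how many series would change owner after adding one node.
moved = sum(1 for k in keys if shard_for(k, three_nodes) != shard_for(k, four_nodes))
print(f"{moved} of {len(keys)} series move when a fourth node joins")
```

With plain modulo placement, a large share of series changes owner whenever the node count changes, which is why rebalancing speed matters. Schemes such as consistent hashing are commonly used to reduce that movement.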

From a software engineering perspective, real-time query performance is usually a trade-off between memory usage, CPU efficiency, and storage structure. Some development teams optimize for minimal resource usage so the system can run on edge devices or small servers. Others optimize for speed to support analytics workloads with massive datasets. The configuration model also matters. Query planners benefit when users define data retention, aggregation rules, or index hints that guide how data is organized. Without such rules, the system may rely on probabilistic or general-purpose indexing that works well in small workloads but slows down under heavy load.

The last set of comparisons usually focuses on flexibility and interoperability. Users want real-time analytics systems that integrate well with dashboards, automation tools, and user-defined workloads. Lightweight query languages help developers interact with data in a natural way, especially when performing complex calculations on moving time windows. Some solutions provide built-in forecasting or statistical features, while others rely on external tools. Extensibility matters for real-time operational pipelines where new metrics appear frequently and schemas must adapt without downtime.
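
A calculation over a moving time window can be sketched as follows. `MovingAverage` is a hypothetical helper for this example, and it assumes timestamps arrive in non-decreasing order and that the window length is positive.

```python
from collections import deque


class MovingAverage:
    """Average of values whose timestamps fall in (now - window, now]."""

    def __init__(self, window_seconds):
        self.window = window_seconds
        self.points = deque()
        self.total = 0.0

    def add(self, ts, value):
        self.points.append((ts, value))
        self.total += value
        # Evict points that have fallen out of the window. The point just
        # added is always inside it, so the deque never becomes empty.
        while self.points[0][0] <= ts - self.window:
            _, old_value = self.points.popleft()
            self.total -= old_value
        return self.total / len(self.points)


avg = MovingAverage(window_seconds=10)
for ts, value in [(0, 10.0), (5, 20.0), (10, 30.0), (30, 40.0)]:
    print(ts, avg.add(ts, value))
```

Keeping a running total means each new point is processed in amortized constant time, rather than re-scanning the whole window on every update.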

In modern workloads, real-time queries are also benchmarked against streaming ingestion and long-term query performance. For example, engineers often compare how quickly a system can run a large multi-hour aggregation against historical data while simultaneously accepting millions of new inserts per minute. The ability to maintain predictable performance under mixed workloads differentiates more advanced architectures from basic ones. In this context, many users evaluate how well an Elasticsearch-style TSDB implementation handles distributed indexing, metadata filtering, and cluster replication under peak loads.

Overall, real-time query performance across time series systems depends on careful trade-offs in storage design, indexing approach, memory usage, and clustering strategy. As organizations continue to adopt continuous monitoring and automation practices, choosing the right architecture for real-time analytics becomes essential to support future growth and operational efficiency.